Exploring the Premature Use of Facial Recognition in Law Enforcement Across Africa

This article, contributed by Chiagoziem Onyekwena, a solutions architect specializing in AI and author of get.Africa, delves into the complexities of applying facial recognition technology (FRT) in Africa’s law enforcement landscape.

The Global AI Race and Africa’s Role

As American and Chinese companies vie for supremacy in artificial intelligence (AI), Africa is increasingly viewed as a pivotal player in shaping the outcome. However, while Chinese companies have made significant strides in the region, American initiatives lag behind. Notable efforts, such as Google’s AI lab in Ghana and IBM’s research offices in Kenya and South Africa, remain exceptions rather than the norm, creating a vacuum that Chinese tech giants like Huawei have exploited.

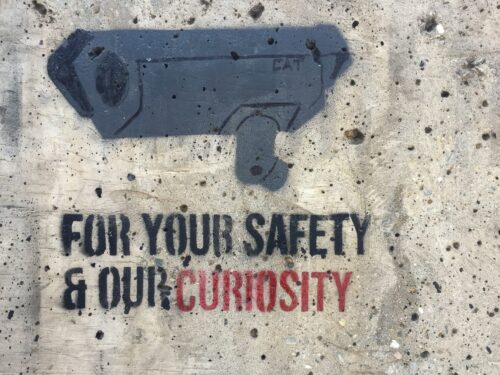

In 2015, Huawei launched Safe City, its flagship public safety platform featuring video AI and facial recognition capabilities. Dubbed “Big Brother-as-a-service,” Safe City had expanded to 12 African cities by 2019. Huawei cites Nairobi, Kenya, as a success story, claiming a 46% reduction in crime following the program’s implementation. Despite these reported results, critics raise concerns about surveillance risks, privacy violations, and the lack of transparency in how the system’s effectiveness is measured.

The Bias Behind Facial Recognition Technology

A 2020 incident in Michigan, USA, involving Robert Julian-Borchak Williams highlights the risks inherent in facial recognition. Misidentified by an AI system as a shoplifting suspect, Williams was wrongfully arrested. The case illustrates cross-race identification bias, in which people struggle to distinguish the features of individuals from other racial groups, a human failing that is now mirrored in AI systems.

Studies have found that facial recognition systems misidentify Black individuals at significantly higher rates than White individuals. Despite advancements in machine learning, racial biases rooted in training data and historical inequities persist. In the U.S., public outcry after high-profile incidents, including the killing of George Floyd, led tech giants like IBM, Microsoft, and Amazon to halt or pause the supply of facial recognition technology to law enforcement. Yet in Africa, where most populations are Black, the stakes for error are even higher.

Addressing AI’s Biases

AI systems are inherently shaped by their training data, which often reflects existing social biases. For example, algorithms developed in Western and East Asian countries tend to perform better at recognizing faces from their own regions, illustrating the influence of skewed datasets. Additionally, the underrepresentation of Black people in online image datasets increases error rates when identifying Black faces.

A lesser-known factor is rooted in the history of photography. Early photographic technology was optimized for lighter skin tones, a legacy that continues to impact digital imaging and, by extension, AI algorithms today. This “Black photogenicity deficit” further limits the accuracy of facial recognition systems.

Despite these challenges, Chinese AI solutions deployed in Africa operate without the same scrutiny as Western technologies. Unlike in the U.S., where public pressure led to accountability measures, Chinese companies face little demand for transparency in error rates or the consequences of their systems on African communities.
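To make concrete what transparency in error rates could look like, here is a minimal Python sketch that breaks a face matcher's errors down by demographic group. It is an illustration under stated assumptions, not a description of how Safe City or any vendor evaluates its systems: the `Trial` record, the `error_rates_by_group` function, the group labels, and all scores are hypothetical. It assumes a set of labeled comparison trials, each with a similarity score and a ground-truth flag, and reports the false match rate (different people accepted as the same person) and the false non-match rate (the same person rejected) per group at a fixed decision threshold.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One face comparison with known ground truth (hypothetical record)."""
    group: str         # demographic label of the person being searched for
    score: float       # similarity score from the matcher, higher = more similar
    same_person: bool  # True if both images really show the same person


def error_rates_by_group(trials, threshold):
    """Return {group: {"fmr": ..., "fnmr": ...}} at the given threshold.

    fmr  = false matches / comparisons of different people
    fnmr = false non-matches / comparisons of the same person
    A rate is None when a group has no trials of the relevant kind.
    """
    counts = defaultdict(
        lambda: {"false_match": 0, "impostor": 0, "false_non_match": 0, "genuine": 0}
    )
    for t in trials:
        c = counts[t.group]
        accepted = t.score >= threshold
        if t.same_person:
            c["genuine"] += 1
            if not accepted:
                c["false_non_match"] += 1
        else:
            c["impostor"] += 1
            if accepted:
                c["false_match"] += 1

    report = {}
    for group, c in counts.items():
        report[group] = {
            "fmr": c["false_match"] / c["impostor"] if c["impostor"] else None,
            "fnmr": c["false_non_match"] / c["genuine"] if c["genuine"] else None,
        }
    return report


if __name__ == "__main__":
    # Invented scores for illustration only; they are not measurements.
    sample = [
        Trial("group_a", 0.20, False), Trial("group_a", 0.90, False),
        Trial("group_a", 0.95, True),
        Trial("group_b", 0.85, False), Trial("group_b", 0.90, False),
        Trial("group_b", 0.70, True),
    ]
    for group, rates in error_rates_by_group(sample, threshold=0.8).items():
        print(group, rates)
```

In a policing context the false match rate is the figure that maps most directly onto wrongful arrests, so a gap in that rate between groups is the kind of disparity a published audit would need to expose.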

The Way Forward

Africa’s embrace of AI-powered surveillance demands a reevaluation of its implications for justice and privacy. Unless the inherent biases and lack of transparency in facial recognition technologies are addressed, the risk of wrongful arrests and convictions could grow, mirroring or even exceeding the problems seen in Western countries. For African cities to truly be “safe,” a careful balance between innovation and ethical considerations must be struck.